Reinforcement Learning (RL) has emerged as a pivotal branch of machine learning and artificial intelligence and plays a key role in advancing the technologies of the Fourth Industrial Revolution (IR 4.0). The modern computational roots of RL can be traced back to the late 1970s, when researchers at the University of Massachusetts began exploring the concept of learning through interaction.

Unlike traditional supervised learning, where models are trained on labeled datasets, RL relies on trial and error: an agent learns optimal behavior from feedback, in the form of rewards or penalties, that follows its actions. This framework makes RL a powerful tool for sequential decision problems that can be framed as Markov Decision Processes (MDPs), where the goal is to maximize the expected cumulative reward over time.
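As a concrete illustration of "cumulative reward": many MDP formulations have the agent maximize the expected discounted return G = r₁ + γr₂ + γ²r₃ + …, where the discount factor γ (between 0 and 1) makes near-term rewards count more than distant ones. Discounting is a common convention rather than something stated above, and the function name and γ = 0.9 below are illustrative assumptions. This minimal sketch computes the return of one finite episode:

```python
def discounted_return(rewards, gamma=0.9):
    """Compute G_0 = r_1 + gamma*r_2 + gamma^2*r_3 + ... for a finite episode.

    `rewards` lists the rewards received after each step, in order.
    Iterating backwards applies the recursion G_t = r_{t+1} + gamma * G_{t+1}.
    """
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


# Three steps with reward 1 each: mathematically 1 + 0.9 + 0.81 = 2.71
# (the printed value may differ in the last digits due to floating point).
print(discounted_return([1.0, 1.0, 1.0], gamma=0.9))
```

Computing the sum backwards avoids raising γ to explicit powers and mirrors the one-step recursion that value-based methods rely on.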

As we delve deeper into the key concepts, algorithms, and applications of reinforcement learning, it becomes evident that this field not only enhances our understanding of intelligent behavior but also paves the way for innovative solutions to real-world challenges.

Reinforcement Learning (RL) is built upon several foundational concepts that are crucial for understanding how agents learn to make decisions. At the heart of RL is the ‘agent,’ the learner or decision-maker that interacts with the environment. The ‘environment’ encompasses everything outside the agent that it interacts with, including the rules and dynamics that govern how the environment responds to the agent’s actions. The ‘state’ describes the agent’s current situation within the environment, while ‘actions’ are the choices available to the agent in a given state. Finally, the ‘reward’ is a feedback signal the agent receives after taking an action, guiding it toward optimal behavior by reinforcing desirable actions and discouraging undesirable ones.
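The interaction between these pieces can be sketched as a loop: the agent observes a state, picks an action, and the environment returns the next state and a reward. The sketch below uses a hypothetical five-cell corridor; the class name `CorridorEnv`, its `reset`/`step` methods, and the +1 reward for reaching the last cell are illustrative assumptions, loosely modeled on the reset/step pattern common in RL toolkits but not any particular library's API.

```python
import random


class CorridorEnv:
    """Hypothetical toy environment: a corridor of cells 0 .. length-1.

    State:   the agent's current cell (an int), starting at 0.
    Actions: 0 = move left, 1 = move right.
    Reward:  +1.0 on reaching the rightmost cell (which ends the episode),
             0.0 otherwise.
    """

    def __init__(self, length=5):
        self.length = length
        self.state = 0

    def reset(self):
        """Start a new episode and return the initial state."""
        self.state = 0
        return self.state

    def step(self, action):
        """Apply the environment's dynamics; return (next_state, reward, done)."""
        move = 1 if action == 1 else -1
        # Clip to the corridor: moving left from cell 0 leaves the agent at 0.
        self.state = min(max(self.state + move, 0), self.length - 1)
        done = self.state == self.length - 1
        reward = 1.0 if done else 0.0
        return self.state, reward, done


def run_episode(env, policy, max_steps=100):
    """Agent-environment loop: observe the state, act, receive a reward, repeat."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(state)                  # the agent chooses an action
        state, reward, done = env.step(action)  # the environment responds
        total_reward += reward                  # the reward is the feedback signal
        if done:
            break
    return total_reward


def random_policy(state):
    return random.choice([0, 1])


def always_right(state):
    return 1


print(run_episode(CorridorEnv(), always_right))   # 1.0 (goal reached in 4 steps)
print(run_episode(CorridorEnv(), random_policy))  # 1.0 or 0.0, depending on chance
```

Swapping one policy for the other changes only the agent; the environment and the loop stay the same, which is exactly the separation the agent/environment vocabulary describes.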

These key concepts form the backbone of RL and provide a structured approach to decision-making. The agent’s goal is to learn an ‘optimal policy,’ a mapping from states to actions that maximizes the expected cumulative reward over time. Learning typically proceeds by trial and error: the agent tries different actions and learns from their consequences. Balancing exploration (trying new actions) against exploitation (choosing actions already known to be rewarding) is a critical aspect of RL and strongly influences how effectively the agent learns from its environment.
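The exploration versus exploitation trade-off is easiest to see in a multi-armed bandit, a one-state problem in which each action ("arm") pays a noisy reward. Below is a minimal sketch of ε-greedy selection, one common (but not the only) way to balance the two: with probability ε the agent explores a random arm, otherwise it exploits the arm with the best estimated value so far. The arm means, the Gaussian reward noise, ε = 0.1, and the function name are hypothetical choices for illustration.

```python
import random


def epsilon_greedy_bandit(true_means, steps=5000, epsilon=0.1, seed=0):
    """Epsilon-greedy action selection on a multi-armed bandit.

    `true_means` are the (hidden) expected rewards of each arm; each pull
    returns a Gaussian sample around that mean with standard deviation 1.
    Returns (value estimates, pull counts, average reward per step).
    """
    rng = random.Random(seed)
    n_arms = len(true_means)
    estimates = [0.0] * n_arms  # running average reward per arm
    counts = [0] * n_arms
    total = 0.0
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)                           # explore
        else:
            arm = max(range(n_arms), key=lambda a: estimates[a])  # exploit
        reward = rng.gauss(true_means[arm], 1.0)
        counts[arm] += 1
        # Incremental mean update: Q <- Q + (r - Q) / n
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
        total += reward
    return estimates, counts, total / steps


estimates, counts, avg_reward = epsilon_greedy_bandit([0.2, 0.5, 1.0])
print("pulls per arm:", counts)
```

With ε = 0 the agent can lock onto whichever arm first looks good; the occasional random pulls are what let it discover that the third arm is the best one.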

RL algorithms enable agents to learn optimal behaviors through interaction with their environment. They are commonly grouped into three families: model-free value-based methods, model-based methods, and policy gradient methods (which are usually model-free as well).

Model-free methods, such as Q-learning and SARSA, learn the value of actions directly from experience, without requiring a model of the environment’s dynamics. In contrast, model-based methods build (or are given) a model of the environment, which lets the agent simulate and plan its actions before executing them; this can make learning more sample-efficient in complex scenarios. Policy gradient methods, another significant category, optimize the policy directly by adjusting the parameters of a parameterized policy in the direction that increases expected reward. This approach is particularly useful in high-dimensional or continuous action spaces, where value-based methods, which must maximize over all actions, may struggle.
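To make the model-free idea concrete, here is a minimal tabular Q-learning sketch. The environment is a hypothetical corridor of five cells (actions: left or right; reward +1 on reaching the last cell, which ends the episode), and the hyperparameters (learning rate α = 0.1, discount γ = 0.9, ε = 0.1, 500 episodes) are illustrative assumptions. The update Q(s, a) ← Q(s, a) + α[r + γ max_a′ Q(s′, a′) − Q(s, a)] uses only sampled transitions; the agent never consults the transition rule for planning.

```python
import random


def q_learning_corridor(length=5, episodes=500, alpha=0.1, gamma=0.9,
                        epsilon=0.1, max_steps=100, seed=0):
    """Tabular Q-learning on a hypothetical corridor (cells 0 .. length-1).

    Actions: 0 = left, 1 = right. Reaching the last cell gives reward +1
    and ends the episode. The agent only sees sampled
    (state, action, reward, next_state) tuples from `step`; it never uses
    the rule inside `step` to plan, which is what makes this model-free.
    """
    rng = random.Random(seed)
    goal = length - 1
    q = [[0.0, 0.0] for _ in range(length)]  # q[state][action]

    def step(state, action):
        nxt = min(max(state + (1 if action == 1 else -1), 0), goal)
        return nxt, (1.0 if nxt == goal else 0.0), nxt == goal

    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            # Epsilon-greedy behavior policy; ties are broken at random.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            nxt, reward, done = step(state, action)
            # Q-learning target bootstraps from the greedy value of nxt;
            # a terminal state contributes no future value.
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
            if done:
                break
    return q


q = q_learning_corridor()
greedy = [0 if left > right else 1 for left, right in q[:-1]]
print(greedy)  # learned greedy action per non-terminal cell; expected [1, 1, 1, 1]
```

SARSA differs mainly in its target: it bootstraps from the action the behavior policy actually takes next rather than from the maximum over actions.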

Each of these algorithm types has its own strengths and weaknesses, so the right choice depends on the specific application and environment. For instance, model-free methods are often simpler to implement, but they may require extensive exploration and are typically less sample-efficient than model-based approaches. Understanding these trade-offs is essential for practitioners and researchers selecting the most appropriate algorithm for a given reinforcement learning task.
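For contrast with model-free learning, the sketch below shows the planning side of a model-based approach: value iteration on a hypothetical five-cell corridor (move left or right; reward +1 on reaching the last cell, which ends the episode), using a model that returns the next state and reward for any state-action pair. Here the model is hand-written and assumed correct, which sidesteps the hard part of many model-based methods (learning an accurate model from data); the names and γ = 0.9 are illustrative assumptions.

```python
def value_iteration_corridor(length=5, gamma=0.9, tol=1e-8):
    """Plan with a known model of a hypothetical corridor (cells 0 .. length-1).

    `model(state, action)` returns (next_state, reward, done). Here it is
    hand-written; a model-based agent would often have to learn it from data.
    Planning itself needs no interaction with a real environment.
    """
    goal = length - 1

    def model(state, action):
        nxt = min(max(state + (1 if action == 1 else -1), 0), goal)
        return nxt, (1.0 if nxt == goal else 0.0), nxt == goal

    def q_value(values, state, action):
        # One-step lookahead using the model instead of real experience.
        nxt, reward, done = model(state, action)
        return reward if done else reward + gamma * values[nxt]

    values = [0.0] * length  # the goal cell is terminal and keeps value 0
    while True:
        delta = 0.0
        # In-place sweep over the non-terminal cells.
        for s in range(goal):
            best = max(q_value(values, s, a) for a in (0, 1))
            delta = max(delta, abs(best - values[s]))
            values[s] = best
        if delta < tol:
            break
    # Greedy policy with respect to the converged values.
    policy = [max((0, 1), key=lambda a: q_value(values, s, a)) for s in range(goal)]
    return values, policy


values, policy = value_iteration_corridor()
print(policy)  # greedy action per non-terminal cell
```

Because the model can be queried freely, planning needs no real interactions at all; the cost is that any error in a learned model carries over into the plan.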

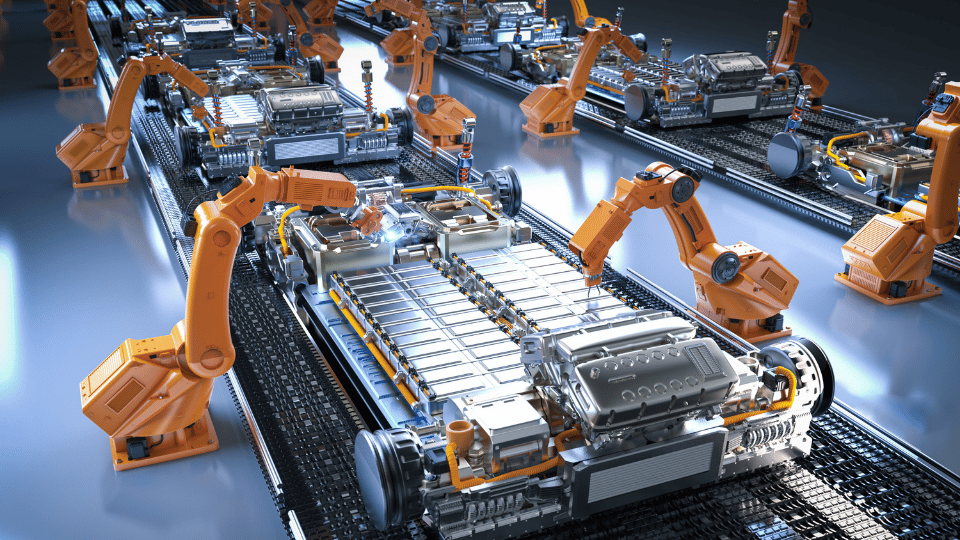

One of the most notable applications of RL is in robotics, where RL algorithms enable machines to learn from their environment and improve their performance over time. For instance, robots can be trained to navigate around obstacles or to perform intricate tasks, such as assembly-line operations, using the feedback their actions produce. This capability can improve efficiency and reduce the need for extensive hand-coded programming, allowing for more adaptive and intelligent robotic systems.

In addition to robotics, reinforcement learning is making significant strides in the finance sector. Financial institutions leverage RL for algorithmic trading, where agents learn to make investment decisions based on market conditions and historical data. By continuously optimizing their strategies through trial and error, these algorithms can potentially yield higher returns while managing risks effectively.

Another prominent application of reinforcement learning is in gaming and strategic decision-making. In chess, for instance, DeepMind’s AlphaZero learned through self-play reinforcement learning to evaluate positions and select moves, reaching superhuman strength. This application highlights RL’s capability to learn from vast amounts of self-generated experience and its potential to solve complex problems in other fields.

RL is also applied in marketing, where it personalizes user experiences by learning from consumer behavior and optimizing recommendations, which can drive engagement and sales.

Additionally, in the realm of natural language processing (NLP), RL is utilized for tasks such as text summarization and machine translation, enhancing the quality and relevance of generated content.

One of the most significant issues in RL is the exponential growth of state and action spaces as problems become more complex, often called the curse of dimensionality. As these spaces grow, the algorithm struggles to explore and learn effectively, and its sample efficiency drops. Consequently, RL algorithms often require vast amounts of data to reach satisfactory performance, a considerable drawback in environments where data collection is expensive or time-consuming.
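A quick back-of-the-envelope calculation shows how fast a tabular representation grows. Assuming, hypothetically, that each continuous state variable is discretized into 10 bins and that the agent has 4 actions, a Q-table needs 10^d × 4 entries for d state variables:

```python
def tabular_size(bins_per_dim, n_dims, n_actions):
    """Entries in a Q-table when each of `n_dims` state variables is
    discretized into `bins_per_dim` bins (a hypothetical setup)."""
    n_states = bins_per_dim ** n_dims  # grows exponentially with n_dims
    return n_states * n_actions


for d in (1, 2, 4, 8, 16):
    print(d, tabular_size(bins_per_dim=10, n_dims=d, n_actions=4))
```

Under these assumptions, 8 state variables already require 4 × 10^8 entries and 16 require 4 × 10^16, far more than an agent could ever visit, which is one reason practical RL relies on function approximation such as neural networks rather than tables.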

Another critical challenge in RL is transfer learning. Many RL algorithms struggle to carry knowledge gained in one task or environment over to another, which limits their adaptability and generalization. As a result, learning is often redundant: the agent must start from scratch for each new task.

Additionally, the issue of explainability looms large in RL, as the decision-making processes of these algorithms can be opaque, making it challenging for practitioners to understand and trust their outputs. Addressing these challenges is essential for the broader adoption and success of reinforcement learning in real-world applications.

Reinforcement learning is a pivotal AI approach in which agents learn through trial and error to solve problems in robotics, gaming, and beyond. Despite challenges such as high computational demands and difficult hyperparameter tuning, advances such as deep reinforcement learning and multi-agent systems are driving its growth. By addressing its limitations and integrating with other AI paradigms, reinforcement learning holds great promise for transformative applications across industries.